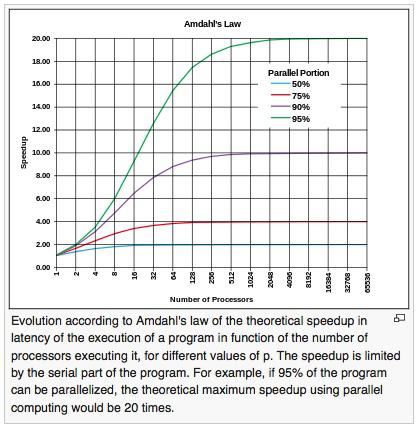

Strictly speaking, Amdahl’s Law isn’t only about speeding up serial programs with parallel processing techniques, but in practice that’s how it’s most often applied. Here’s a description from Wikipedia:

“Amdahl's law is often used in parallel computing to predict the theoretical speedup when using multiple processors. For example, if a program needs 20 hours using a single processor core, and a particular part of the program which takes one hour to execute cannot be parallelized, while the remaining 19 hours (p = 0.95) of execution time can be parallelized, then regardless of how many processors are devoted to a parallelized execution of this program, the minimum execution time cannot be less than that critical one hour. Hence, the theoretical speedup is limited to at most 20 times (1/(1 − p) = 20). For this reason parallel computing is relevant only for a low number of processors and very parallelizable programs.”
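To make the arithmetic in that quote concrete, here is a minimal Python sketch of the usual form of Amdahl's Law, speedup = 1 / ((1 − p) + p / n), where p is the parallelizable fraction and n is the number of processors. The function names `amdahl_speedup` and `run_time_hours` are made up for illustration, and the sketch assumes the parallel part scales perfectly with n, which real programs rarely manage.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Theoretical speedup on n processors when a fraction p of the work is parallelizable."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    # The serial fraction (1 - p) is unaffected; the parallel fraction is divided by n.
    return 1.0 / ((1.0 - p) + p / n)


def run_time_hours(total_hours: float, p: float, n: int) -> float:
    """Estimated wall-clock time on n processors for a job that takes total_hours on one."""
    return total_hours / amdahl_speedup(p, n)


if __name__ == "__main__":
    # The Wikipedia example: a 20-hour job with 19 hours (p = 0.95) parallelizable.
    for n in (1, 2, 8, 64, 1024, 65536):
        speedup = amdahl_speedup(0.95, n)
        hours = run_time_hours(20.0, 0.95, n)
        print(f"{n:>6} processors: speedup {speedup:6.2f}x, about {hours:5.2f} hours")
```

Running it shows the speedup creeping toward 20x without ever reaching it, and the run time never dropping below the one serial hour, no matter how many processors are added.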

The image comes from this Wikipedia page. I looked it up after starting to watch this YouTube video about Amdahl’s Law, Ruby, and Scala at Twitter.