Some advanced Lispers will cringe when someone says that a function “returns a value.” This is because Lisp derives from something called lambda calculus, which is a fundamental programming-like algebra developed by Alonzo Church.

In the lambda calculus you “run” a program by performing substitution rules on the starting program to determine the result of a function. Hence, the result of a set of functions just sort of magically appears by performing substitutions; never does a function consciously “decide” to return a value. Because of this, Lisp purists prefer to say that a function “evaluates to a result.”

Introduction

I like to start most lessons with a relevant quote, but in the case of “FP as Algebra,” several relevant quotes come to mind, so I’d like to share one more, from the book, Thinking Functionally with Haskell:

“FP has a simple mathematical basis that supports equational reasoning about the properties of programs.”

Because of functional programming’s main features — pure functions and immutable values — writing FP code is like writing algebraic equations. Because I always liked algebra and thought it was simple, this made FP appealing to me.

I’ll demonstrate what I mean in this lesson.

Goals

The first goal of this lesson is to give some examples of how FP code is like algebra.

A second goal of this lesson is to keep building an “FP way of thinking” about programming problems. The mindset of this lesson is that each pure function you write is like an algebraic equation, and then gluing those functions together to create a program is like combining a series of algebraic equations together to solve a math problem.

As this chapter’s introductory quote suggests, once you begin to think of your functions as “evaluating to a result,” you start to see writing code as being like writing algebraic equations, and that’s a good thing.

Background: Algebra as a reason for “Going FP”

Hopefully you’ll find your own reasons for “Going FP,” but for me the lightbulb went on when I realized that FP let me look at my code as algebra. Gluing pure functions together felt like combining a series of algebraic equations (that is, algebraic substitution), and because I always liked algebra, this was a good thing.

Before learning FP my background was in OOP. I first learned and then taught Java and OOP in the 1990s and early 2000s, and with that background I always looked at problems through the eyes of an OOP developer. That never made me see writing code as being like writing mathematical expressions. I always thought, “Okay, these things here are my objects (Pizza, Topping, Order), these are their behaviors (addTopping), and they hide their internal workings from other objects.”

But since learning FP I now see my code as being more like algebra, and it’s a very different perspective. I clearly remember my first thought when I saw the connection between FP and algebra:

“Whoa ... if my function’s output depends solely on its input, well, shoot, I can always write one pure function. If I can write one pure function, then I can write another, and then another. And then once they’re all working I can glue them together to form a complete solution, like a series of equations. And since they’re all pure functions they can’t really fail — especially not because of hidden state issues — at least not if I test them properly.”

Sometimes programming can get a little overwhelming when you think about writing an entire application, but when I realized that I can always write one pure function, that gave me a tremendous sense of confidence.

As a programming tip, when you’re writing a pure function, think of that function as your world, your only concern in the entire world. Because “output depends only on input,” all you have to think about is that your function (your world) is given some inputs, and you need to create an algorithm to transform those inputs into the desired result.

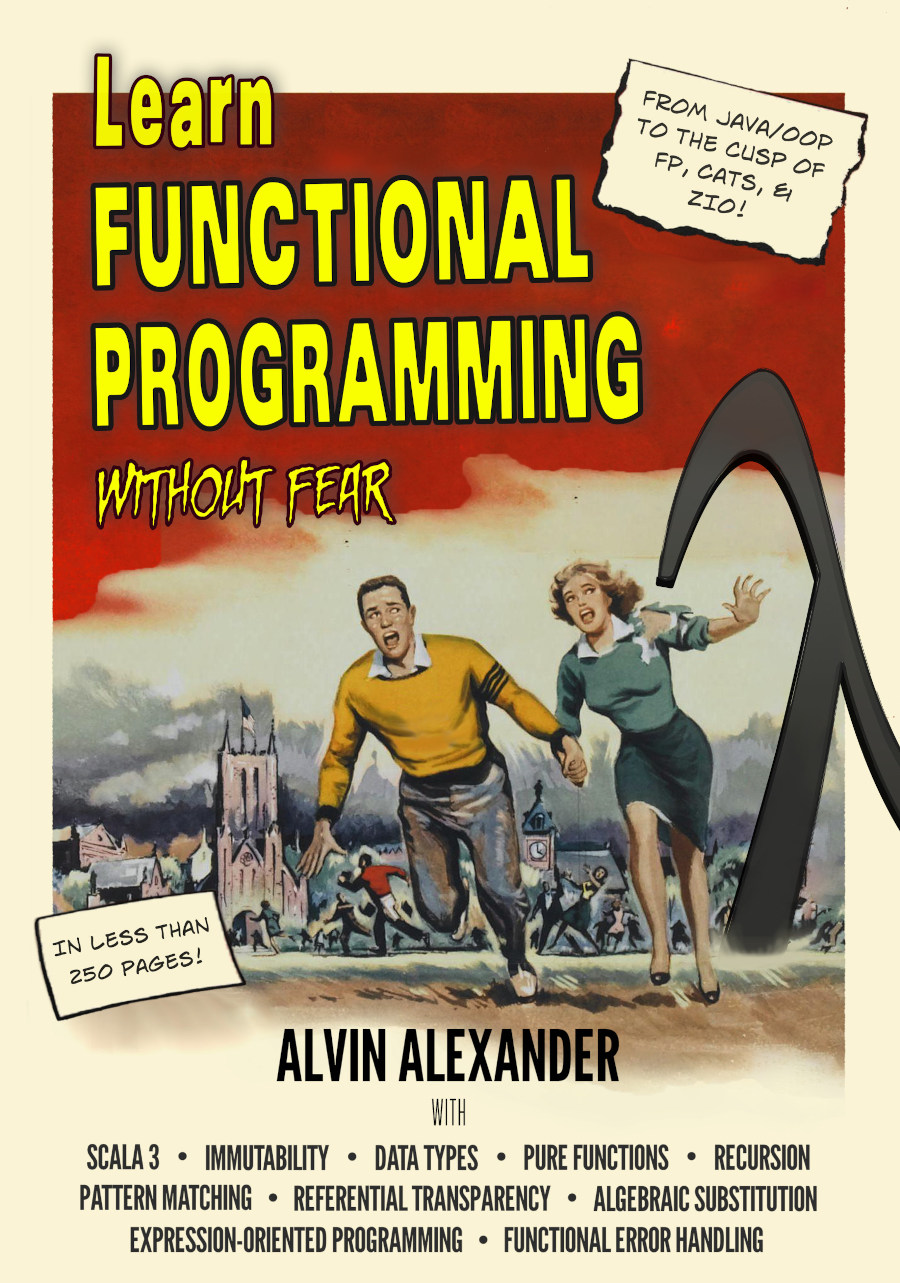

This post is sponsored by my new book,

Learn Functional Programming Without Fear.

Background: Defining algebra

It’s important to understand what “algebra” is so you can really internalize this lesson.

Unfortunately, trying to find a good definition of algebra is difficult because many people go right from the concept of “algebra” to “mathematics,” and that’s not what I have in mind. This informal definition of algebra by Daniel Eklund fits my way of thinking a little better:

For purposes of simplicity, let us define algebra to be two things: 1) a SET of objects (not “objects” as in object-oriented), and 2) the OPERATIONS used on those objects to create new objects from that set.

As emphasized, the key words in that sentence are set and operations. Mr. Eklund goes on to define “numeric algebra”:

In the case of numeric algebra — informally known as high-school algebra — the SET is the set of numbers (whether they be natural, rational, real, or complex) and the OPERATIONS used on these objects can be (but definitely not limited to be) addition or multiplication. The algebra of numbers is therefore the study of this set, and the laws by which these operators generate (or don’t generate) new members from this set.

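As an example, the set of natural numbers is {0, 1, 2, ...}. Operations on that set include addition and multiplication, and these operators generate new members of the set, such as 1 + 2 yielding 3. Subtraction is an operator that doesn’t always generate a member of the set: 1 - 2 is not a natural number.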

Mr. Eklund goes on to define other types of algebras, but for our purposes I’ll just share one more sentence:

The key thing to realize here is that an algebra lets us talk about the objects and the operations abstractly, and to consider the laws that these operations obey as they operate on the underlying set.

In Scala/FP, the “objects” Mr. Eklund refers to can be thought of as the built-in Scala types and the custom types you create, and the “operations” can be thought of as the pure functions you write that work with those types.

For instance, in a pizza store application, the “set” might include types like Pizza, Topping, Customer, and Order. To find the operations that work with that set, you have to think about the problem domain. In a pizza store you add toppings to a pizza that a customer wants, and then you can add one or more pizzas to an order for that customer. The types are your set (the nouns), and the functions you create define the only possible operations (verbs) that can manipulate that set.

Given that discussion, a Scala trait for a Pizza type might look like this:

trait Pizza {

def setCrustSize(s: CrustSize): Pizza

def setCrustType(t: CrustType): Pizza

def addTopping(t: Topping): Pizza

def removeTopping(t: Topping): Pizza

def getToppings(): Seq[Topping]

}

In the same way that 1 is a natural number and can work with operations like add and subtract, Pizza is a type and can work with the operations (methods) it defines.
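To make the “set plus operations” idea concrete, here is a minimal sketch of one possible immutable pizza. The names `SimplePizza`, `Cheese`, `Pepperoni`, `SmallCrust`, and `LargeCrust` are assumptions invented for this example, and only a few of the trait’s operations are shown. The point is that every operation evaluates to a new member of the set rather than changing an existing one:

```scala
// Hypothetical supporting types, invented for this sketch.
sealed trait Topping
case object Cheese extends Topping
case object Pepperoni extends Topping

sealed trait CrustSize
case object SmallCrust extends CrustSize
case object LargeCrust extends CrustSize

// One possible immutable pizza: each operation evaluates to a new SimplePizza.
final case class SimplePizza(crustSize: CrustSize, toppings: Seq[Topping]) {
  def setCrustSize(s: CrustSize): SimplePizza = copy(crustSize = s)
  def addTopping(t: Topping): SimplePizza = copy(toppings = toppings :+ t)
  def removeTopping(t: Topping): SimplePizza = copy(toppings = toppings.filterNot(_ == t))
}

object PizzaSketch {
  val plain    = SimplePizza(SmallCrust, Seq.empty)
  val loaded   = plain.addTopping(Cheese).addTopping(Pepperoni)
  val noCheese = loaded.removeTopping(Cheese)
  // `plain` and `loaded` are untouched by the later operations.
}
```

Just as 1 + 2 yields the new number 3 without changing 1 or 2, `plain.addTopping(Cheese)` yields a new pizza and leaves `plain` as it was.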

From algebra to FP

If you haven’t worked with algebra in a while, it may help to see a few algebraic functions as a refresher:

f(x) = x + 1

f(x,y) = x + y

f(a,b,c,x) = a * x^2 + b*x + c

It’s easy to write those algebraic equations as pure functions in Scala/FP. Assuming that all the values are integers, they can be written as these functions in Scala:

def f(x: Int) = x + 1

def f(x: Int, y: Int) = x + y

def f(a: Int, b: Int, c: Int, x: Int) = a*x*x + b*x + c

These are pure functions (“output depends only on input”) that use only immutable values. This is one way to see that FP is like algebra: start with algebraic functions and write their Scala/FP equivalents.

From FP to algebra

Similarly I can start with Scala/FP code and show how it looks like algebraic equations. For example, take a look at these Scala expressions:

val emailDoc = getEmailFromServer(src)

val emailAddr = getAddr(emailDoc)

val domainName = getDomainName(emailAddr)

You can see how that code is like algebra if I add comments to it:

val emailDoc = getEmailFromServer(src) // val b = f(a)

val emailAddr = getAddr(emailDoc) // val c = g(b)

val domainName = getDomainName(emailAddr) // val d = h(c)

No matter what these functions do behind the scenes, they are essentially algebraic expressions, so you can reduce them just like you reduce mathematical expressions. Using simple substitution, the first two expressions can be combined to yield this:

val emailAddr = getAddr(getEmailFromServer(src))

val domainName = getDomainName(emailAddr)

Then those two expressions can be reduced to this:

val domainName = getDomainName(getAddr(getEmailFromServer(src)))

If you look at the comments I added to the code, you’ll see that I started with this:

val b = f(a)

val c = g(b)

val d = h(c)

and reduced it to this:

val d = h(g(f(a)))

I can make these substitutions because the code is written as a series of expressions that use pure functions.

You can write the code in three lines, or perform the substitutions to end up with just one line. Both forms are valid, and they are equivalent.

What makes this possible is that other than getEmailFromServer(src), which is presumably an impure function, the code:

- Only uses pure functions (no side effects)

- Only uses immutable values

When your code is written like that, it really is just a series of algebraic equations.
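The substitution can be checked with a small sketch. The implementations below are hypothetical stand-ins: the “email document” is just a `String`, the parsing is deliberately naive, and the impure `getEmailFromServer` step is left out. The sketch also shows that `h(g(b))` can be written as a single composed function with `andThen`:

```scala
object SubstitutionSketch {
  // Hypothetical pure stand-ins for the functions named in the text.
  val getAddr: String => String = emailDoc =>
    emailDoc.split("\n").find(_.startsWith("From: ")).map(_.drop(6)).getOrElse("")

  val getDomainName: String => String = emailAddr =>
    emailAddr.dropWhile(_ != '@').drop(1)

  // A made-up document standing in for the result of getEmailFromServer(src).
  val emailDoc = "From: al@example.com\nSubject: hello"

  // Step by step: c = g(b), then d = h(c)
  val emailAddr  = getAddr(emailDoc)
  val stepByStep = getDomainName(emailAddr)

  // After substitution: d = h(g(b))
  val substituted = getDomainName(getAddr(emailDoc))

  // The same pipeline as one composed function
  val domainOf: String => String = getAddr.andThen(getDomainName)
  val composed = domainOf(emailDoc)
}
```

All three values (`stepByStep`, `substituted`, and `composed`) evaluate to the same result, which is what makes the forms interchangeable.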

Benefit: Algebra is predictable

A great thing about algebra is that the results of algebraic equations are incredibly predictable. For example, if you have a double function like this:

def double(i: Int) = i * 2

you can then call it with the number 1 an infinite number of times and it will always return 2. That may seem obvious, but hey, it’s how algebra works.

Because of this, you know that these things will always happen:

println(double(1)) // prints 2

println(double(2)) // prints 4

println(double(3)) // prints 6

And you also know that this can never happen:

println(double(1)) // prints 5 (can never happen)

println(double(1)) // prints 17 (can never happen)

With pure functions you can never get two different results for the same input value(s). That can’t happen in algebra, and it can’t happen with pure functions, either.
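One practical consequence of this predictability is that a call to a pure function can be replaced by its result without changing the meaning of the surrounding code. A minimal sketch, where the object and value names are made up for illustration:

```scala
object PredictableSketch {
  def double(i: Int): Int = i * 2

  // Because double is pure, the call double(1) and the value 2 are interchangeable.
  val withCalls  = double(1) + double(1)
  val withValues = 2 + 2

  // Calling it many times never produces a second, different answer.
  val distinctResults = (1 to 1000).map(_ => double(1)).distinct.toList
}
```

Here `withCalls` and `withValues` are equal, and `distinctResults` contains a single element, 2.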

A game: What can possibly go wrong?

A great thing about thinking about your code as algebra is that you can look at one of your pure functions and ask, “What can possibly go wrong with this function?” When you do so, I hope that trying to find any problems with it will be very difficult. After thinking about it long and hard I hope you get to the point of saying, “Well, I guess the JVM could run out of RAM (but that doesn’t have anything directly to do with my function).”

My point is that because it’s isolated from the rest of the world, it should be a real struggle to think about how your pure function can possibly fail. When you’re writing OOP code you have to concern yourself that “output does not only depend on input,” which means that you have to think about everything else in the application that can fail or be a problem — i.e., things like (a) state of the application outside the function’s scope, and (b) variables being mutated while you’re trying to use them — but with FP code you don’t have those concerns.

For example, imagine that you’re writing a multi-threaded imperative application, you’ve been given a list of users, and the purpose of your function is to sort that list of users. There are a lot of ways to sort lists, so that isn’t hard, but what happens to your code if that list of users is mutated by another thread while your function is trying to sort the list? For instance, imagine that 20 users are removed from the list while you’re trying to sort it; what will happen to your function?

You can demonstrate this problem for yourself. Remembering that Scala Array elements can be mutated, imagine that you have an Array[String] like this:

// 1 - a mutable sequence to work with

val arr = Array("one", "two", "three", "four", "five")

Then imagine that you begin printing the length of each string in a different thread, like this:

// 2 - start printing the string lengths in a different thread

val thread = new Thread {

override def run(): Unit = {

printStringLength(arr)

}

}

thread.start()

If you now mutate the array like this:

// 3 - mutate the sequence to see how that other thread works

Thread.sleep(100)

arr(3) = null

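you can easily generate a NullPointerException if your printStringLength method looks like this (the parameter is typed as Array[String] so the method works on the very same array that is being mutated; in Scala 2.13 and later, passing an Array where a Seq is expected makes an immutable copy):

def printStringLength(xs: Array[String]): Unit = {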

for (x <- xs) {

println(x.length)

Thread.sleep(200)

}

}

Conversely, it’s impossible to replicate this example if you use a Scala Vector or List. Because these sequences are immutable, you can’t accidentally mutate a sequence in one thread while it’s being used in another.
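For comparison, here is a rough sketch of the immutable version. The names `ImmutableSketch`, `words`, `changed`, and `stringLengths` are assumptions for this example. A `Vector` has no in-place assignment, so an expression like `words(3) = null` does not compile; the closest operation, `updated`, evaluates to a new `Vector` and leaves the original alone:

```scala
object ImmutableSketch {
  val words = Vector("one", "two", "three", "four", "five")

  // `updated` builds a new Vector; `words` itself is not changed.
  val changed = words.updated(3, "FOUR")

  // A pure variant of the length calculation: it evaluates to the
  // lengths instead of printing them.
  def stringLengths(xs: Seq[String]): Seq[Int] = xs.map(_.length)
}
```

Any thread that holds a reference to `words` keeps seeing the same five strings, no matter what other threads derive from it.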

Transform as you copy, don’t mutate

In my previous Java/OOP life I mutated variables all the time. That’s how I did almost everything, and frankly, I didn’t know there was another way. I knew that a Java String was immutable, but based on my OOP thinking, I thought this was more of a pain than anything that was actually helpful to me.

But when you think of your code as algebra, you realize that mutating a variable has nothing to do with algebra. For instance, I never had a math instructor who said, “Okay, x is currently 10, but let’s go ahead and add 1 to it so x is now 11.” Instead what they said is, “Okay, we have x, which is 10, and what we’ll do is add 1 to it to get a new value y”:

x = 10

y = x + 1

In FP code you do the same thing. You never mutate x, but instead you use it as a foundation to create a new value. In Scala, you typically do this using the case class copy method.

Case class copy method

When you use a Scala case class you automatically get a copy method that supports this “transform as you copy” algebraic philosophy.

A simple way to demonstrate this is to show what happens when a person changes their name. I’ll demonstrate this with two variations of a Person class, first showing an OOP/imperative approach, and then showing an FP/algebraic approach.

With OOP code, when Jonathan Stuart Leibowitz changes his name to Jon Stewart, you might write code like this:

// oop design

class Person(var name: String)

// create an instance with the original name

var p = new Person("Jonathan Stuart Leibowitz")

// change the name by mutating the instance

p.name = "Jon Stewart"

In my OOP life I wrote code like that all the time and never gave it a second thought. But you just don’t do that sort of thing in algebra. Instead, what you do in FP/algebraic code is this:

// fp design

case class Person(name: String)

// create an instance with the original name

val p = Person("Jonathan Stuart Leibowitz")

// create a new instance with the "transform as you copy" approach

val p2 = p.copy(name = "Jon Stewart")

The FP approach uses the copy method to create a new value p2 from the original p, resulting in p2.name being “Jon Stewart.”

Mathematically, the last two lines of the FP approach are similar to this:

val x = a

val y = x + b

Or, if it helps to use the original value names, this:

val p = a

val p2 = p + b

It’s good to see the case class copy approach now, because (a) it’s a Scala/FP idiom, and (b) we’re going to use it a lot in this book.
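The copy method becomes more useful once a class has several fields, because you name only the fields that change. In this sketch the second field, `occupation`, is a hypothetical addition that is not part of the Person class shown earlier:

```scala
object CopySketch {
  // Hypothetical two-field variant of the Person class from the text.
  final case class Person(name: String, occupation: String)

  val p  = Person("Jonathan Stuart Leibowitz", "comedian")

  // Only `name` is given a new value; `occupation` is carried over from p.
  val p2 = p.copy(name = "Jon Stewart")
}
```

After this runs, `p` still holds the original name, and `p2` is a separate value with the new name and the same occupation.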

As I mentioned earlier, I never thought of my OOP code as having the slightest thing to do with algebra. Now I think of it that way all the time, and that thought process is the result of writing pure functions and using only immutable variables.

Benefit: Automated property-based testing

In a preview of a later chapter, a nice benefit of coding in this style is that you can take advantage of something called “property-based testing,” which I’ll call “PBT” here. PBT is a way of testing your code in a manner similar to using JUnit, but instead of writing each individual test manually at a low level, you describe the properties your function should have and let the PBT tool pound away at it. You can tell the PBT tool to throw 100 test values at your function, or 1,000, or many more, and because your function is pure (and therefore has this algebraic property), the tool can generate and run those tests for you.

Technically you can probably do the same thing with impure functions, but I find that this technique is much easier with pure functions.

I wrote a little about this in the Benefits of Functional Programming lesson, and I write much more about it later in this book, so I won’t write any more here. If you’re interested in more details at this time, see the ScalaCheck website and the property-based testing page on that site.
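To give a feel for the idea without pulling in a library, here is a hand-rolled sketch. It is not ScalaCheck’s API; the helper `forAllInts` and the property names are invented for this example. It generates random inputs from a fixed seed and checks that a property holds for every one of them:

```scala
import scala.util.Random

object PropertySketch {
  def double(i: Int): Int = i * 2

  // A hand-rolled stand-in for a PBT tool: generate n random Ints from a
  // fixed seed and check that the property holds for each of them.
  def forAllInts(n: Int, seed: Long)(property: Int => Boolean): Boolean = {
    val rng = new Random(seed)
    Iterator.fill(n)(rng.nextInt()).forall(property)
  }

  // Property 1: doubling a number is the same as adding it to itself.
  val doubleIsSelfSum = forAllInts(1000, seed = 42L)(i => double(i) == i + i)

  // Property 2: the result is always even.
  val doubleIsEven = forAllInts(1000, seed = 42L)(i => double(i) % 2 == 0)
}
```

A real PBT library adds a lot on top of this, such as smarter generators and shrinking of failing inputs, but the core idea is the same: state a law, then let the tool hunt for a counterexample.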

Later in this book: Algebraic Data Types

Another way that FP relates to algebra is with a concept known as Algebraic Data Types, or ADTs. Don’t worry about that name; an ADT is a simple concept. For example, this code is an ADT:

sealed trait Bool

case object True extends Bool

case object False extends Bool

This code from the book, Beginning Scala, is also an ADT:

sealed trait Shape

case class Circle(radius: Double) extends Shape

case class Square(length: Double) extends Shape

case class Rectangle(h: Double, w: Double) extends Shape

I don’t want to get into this in much detail right now; I just want to let you know that there’s more algebra later in this book. The “algebra” in ADTs is described on the Haskell wiki like this:

“Algebraic” refers to the property that an Algebraic Data Type is created by “algebraic” operations. The “algebra” here is “sums” and “products” (of types).

Again, don’t fear the term; it’s another complicated-sounding term for a simple concept, as shown in these examples.
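As a small preview, here is a sketch of how an ADT is typically used. The `area` function and the `ShapeSketch` object are assumptions added for illustration. `Shape` is a “sum” (a shape is a Circle or a Square or a Rectangle), and `Rectangle` is a “product” (it has an h and a w):

```scala
sealed trait Shape
final case class Circle(radius: Double) extends Shape
final case class Square(length: Double) extends Shape
final case class Rectangle(h: Double, w: Double) extends Shape

object ShapeSketch {
  // Because Shape is sealed, the compiler can warn if a case is missing here.
  def area(s: Shape): Double = s match {
    case Circle(r)       => math.Pi * r * r
    case Square(l)       => l * l
    case Rectangle(h, w) => h * w
  }
}
```

Note that `area` is itself a pure function: its result depends only on the shape it is given.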

Summary

In this lesson I tried to show a few ways that functional programming is like algebra. I showed how simple algebraic functions can be written as pure functions in Scala, how a series of Scala expressions looks just like a series of algebraic functions, and how a series of expressions can be reduced using simple algebraic substitution. I also noted that later in this book you’ll learn about a concept named Algebraic Data Types.

The intent of this lesson is to help you keep building an “FP way of thinking” about programming problems. If you write your code using only pure functions and immutable values, your code will naturally migrate towards this algebraic way of thinking:

Pure Functions + Immutable Values == Algebra

Who knows, you may even start saying that your functions “evaluate to a result.”

What’s next

In the next chapter I’ll make a quick observation that when you write functional code, you’re also writing code that fits a style known as Expression-Oriented Programming.